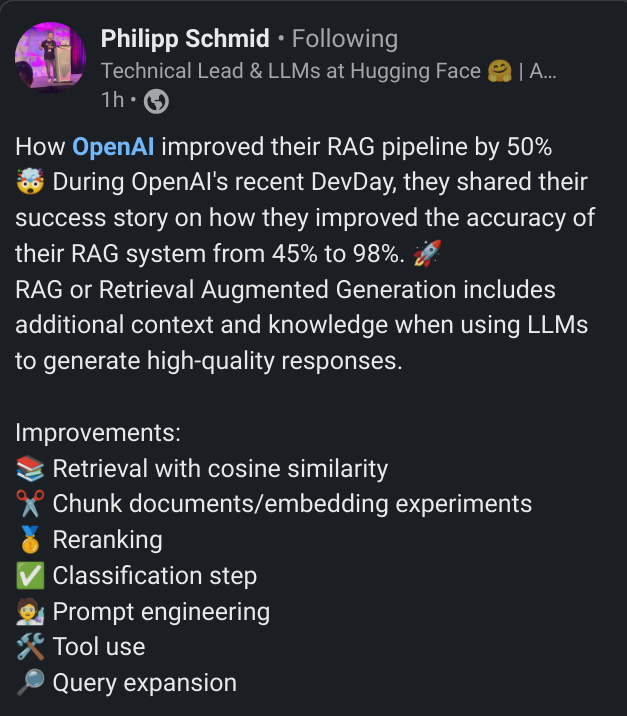

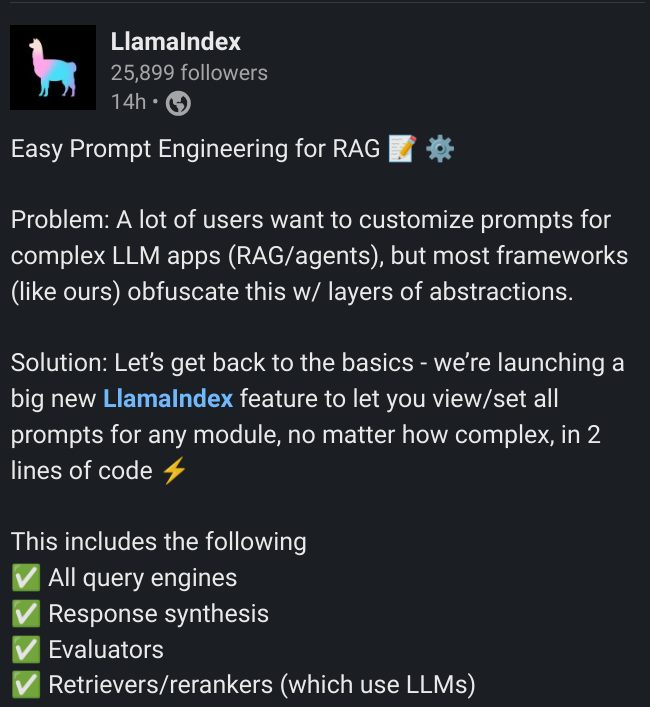

Prompt engineering for RAG // customize prompts for complex LLM apps (RAG/agents)

Update from OpenAI DevDay (How OpenAI improved RAG):

Accessing/Customizing Prompts within Higher-Level Modules Retrieval Augmented Generation (RAG)

However, as a product manager who has recently been putting RAG products into production, I believe RAG is still too limited to meet users’ needs and is due for an upgrade.

Twist for better reasoning

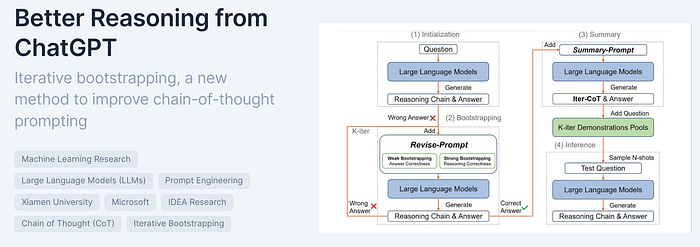

Key insight: Researchers have developed several ways to prompt a large language model to apply a chain of thought (CoT). The typical method is for a human to write an example CoT and include it in the prompt. A faster way is to skip the hand-crafted example and simply instruct the model to “think step by step,” which prompts it to generate not only a solution but also its own CoT (zero-shot CoT). To improve on zero-shot CoT, other work both (i) asked the model to “think step by step” and (ii) supplied model-generated CoTs as examples (auto-CoT). The weakness of this approach is that the model can generate fallacious CoTs and then rely on them when responding to the prompt at hand, which can lead to incorrect responses. To solve this problem, we can draw example prompts from a dataset that includes correct responses, so the model’s responses can be checked against the dataset labels. If a response is wrong, the model can try again until it answers correctly. In this way, it generates correct CoT examples to use in solving other problems.
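A rough sketch of that label-checked loop in Python, to make the idea concrete. Everything here is an assumption for illustration, not the researchers' implementation: `generate` stands in for whatever LLM call you use (prompt in, completion text out), the `Answer:` marker is a made-up convention for finding the final answer, and exact string match against the label is the simplest possible check.

```python
from typing import Callable, List, Optional, Tuple

# Assumed conventions (hypothetical, not from any specific paper or library).
ZERO_SHOT_SUFFIX = "Let's think step by step."
ANSWER_MARKER = "Answer:"


def zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: no hand-written example, just the instruction."""
    return f"Q: {question}\nA: {ZERO_SHOT_SUFFIX}"


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent."""
    if ANSWER_MARKER not in completion:
        return None
    return completion.rsplit(ANSWER_MARKER, 1)[1].strip()


def bootstrap_cot_examples(
    dataset: List[Tuple[str, str]],
    generate: Callable[[str], str],
    max_tries: int = 3,
) -> List[Tuple[str, str]]:
    """Keep only generated CoTs whose final answer matches the dataset label.

    `generate` is a placeholder for an LLM call. Each question is retried
    up to `max_tries` times, then skipped if no attempt matches the label.
    """
    demos: List[Tuple[str, str]] = []
    for question, label in dataset:
        prompt = zero_shot_cot_prompt(question)
        for _ in range(max_tries):
            completion = generate(prompt)
            if extract_answer(completion) == label.strip():
                demos.append((question, completion))
                break
    return demos


def few_shot_prompt(demos: List[Tuple[str, str]], question: str) -> str:
    """Prepend the verified CoT examples to a new question."""
    parts = [f"Q: {q}\nA: {ZERO_SHOT_SUFFIX} {cot}" for q, cot in demos]
    parts.append(zero_shot_cot_prompt(question))
    return "\n\n".join(parts)
```

To use it, pass a list of `(question, label)` pairs and your own model wrapper to `bootstrap_cot_examples`, then feed the result to `few_shot_prompt` along with the new question. Note that matching the label only verifies the final answer, so a kept CoT can still contain a flawed step that happened to land on the right result.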

// save for future hacks…